Designed for large-scale AI infrastructure, the GB200 NVL72 integrates 72 Blackwell GPUs using NVIDIA’s NVLink fabric and advanced direct-to-chip liquid cooling (DLC). It supports demanding workloads including large-scale model training, real-time LLM inference, HPC simulations, media processing, and data-intensive analytics.

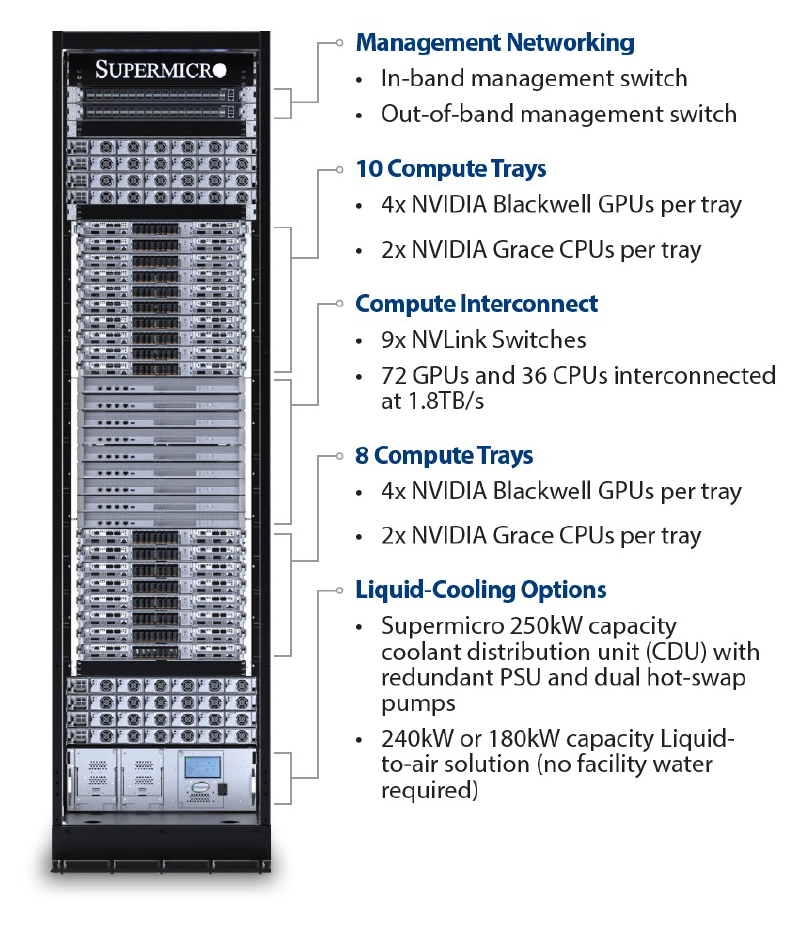

At its core, the NVL72 features 36 Grace CPUs and 72 Blackwell GPUs integrated into a unified, liquid-cooled rack-scale system. Powered by NVIDIA GB200 Superchips and full NVLink connectivity, it delivers up to 30× faster inference for trillion-parameter models—functioning as a single, high-performance GPU.

Real-time trillion-parameter LLM inference

Massive-scale LLM training

Real-time generative AI

High-performance database acceleration

Scientific computing & simulation

AI-powered video & image generation

NVL72 is purpose-built for liquid-cooled operation, featuring custom cold plates and a high-capacity in-rack coolant distribution unit (CDU) capable of handling up to 250kW of thermal load. Its direct-to-chip design helps eliminate thermal bottlenecks and cuts electricity usage by as much as 40%—ideal for demanding AI and HPC environments. For data centers without chilled water infrastructure, optional liquid-to-air solutions offer a flexible, high-efficiency alternative.

Selecting the NVL72 through Thinkmate means working with a team that understands the complexity of AI infrastructure at scale. We provide expert guidance during system sizing and configuration, align deployment with your facility’s technical constraints, and offer long-term support to ensure sustained performance across evolving workloads.