Accelerating the training and inference of deep learning models is crucial to unlocking their potential, and NVIDIA GPUs have emerged as a game-changing technology in this regard.

In this blog, we will look at the newer L40S GPU from NVIDIA—available immediately—and compare it to the NVIDIA A100 GPU. With lead times for the A100 ranging from 30-52 weeks, many organizations are looking at the L40S as a viable alternative.

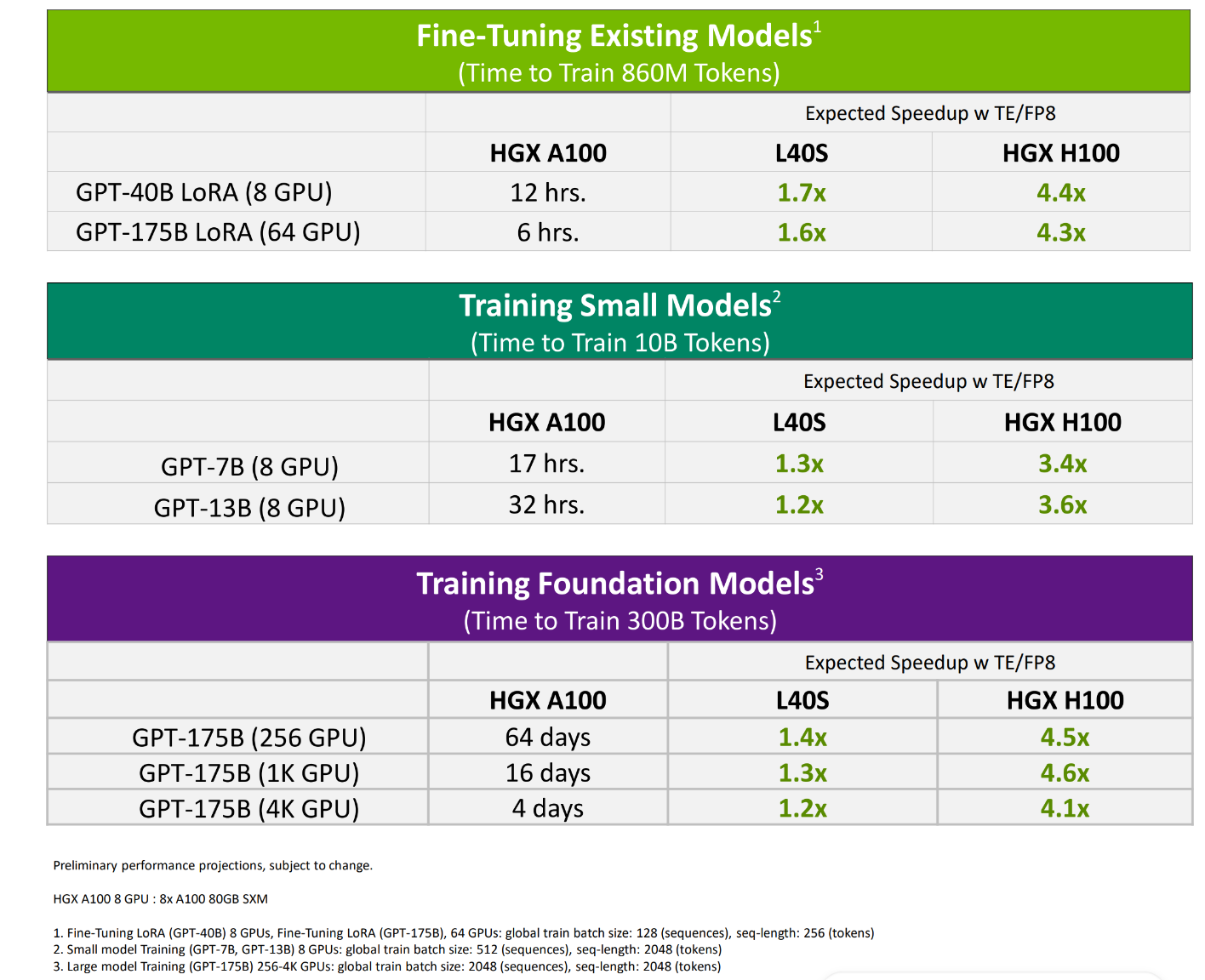

The L40S can accelerate AI training and inference workloads and is an excellent solution for fine-tuning, training small models, and small- to mid-scale training on up to 4K GPUs. See the chart below for performance estimates of the A100 vs. the L40S.

There is much more performance data available (e.g., measured performance, MLPerf benchmarks), so please contact tmsales@thinkmate.com if you’d like further details.

Considering the memory and bandwidth capabilities of both GPUs is essential to accommodate the requirements of your specific LLM inference and training workloads. Determining the size of your datasets, the complexity of your models, and the scale of your projects will guide you in selecting the GPU that can ensure smooth and efficient operations.
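As a rough illustration of the sizing exercise described above, the following Python sketch estimates how much GPU memory a model's weights and training state might need and checks that against a card's capacity. It is a back-of-the-envelope sketch, not a benchmark. The capacities used (48 GB for the L40S, 80 GB for the largest A100 variant) come from NVIDIA's public datasheets rather than from this post, and the bytes-per-parameter figures (2 for FP16 inference weights, roughly 16 for mixed-precision training with Adam) are common rules of thumb. The function names and the 80% headroom factor are hypothetical, and activations, KV cache, and framework overhead are ignored.

```python
GB = 1e9  # decimal gigabytes, matching how GPU memory is usually quoted

# Assumed capacities from NVIDIA's public datasheets (not from this post).
GPU_MEMORY_GB = {"L40S": 48, "A100 80GB": 80}


def weights_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory for the model weights alone (default: FP16, 2 bytes each)."""
    return num_params * bytes_per_param / GB


def training_state_gb(num_params: float, bytes_per_param: int = 16) -> float:
    """Rule-of-thumb memory for weights, gradients, and Adam optimizer
    state in mixed precision (about 16 bytes per parameter).
    Activations are not included."""
    return num_params * bytes_per_param / GB


def fits(required_gb: float, gpu_memory_gb: float, headroom: float = 0.8) -> bool:
    """True if the requirement fits within a fraction of the GPU's memory.
    The 0.8 headroom is an arbitrary safety margin for this sketch."""
    return required_gb <= gpu_memory_gb * headroom


if __name__ == "__main__":
    for params in (7e9, 13e9, 30e9):
        need = weights_gb(params)
        for name, capacity in GPU_MEMORY_GB.items():
            verdict = "fits" if fits(need, capacity) else "does not fit"
            print(f"{params / 1e9:.0f}B FP16 weights (~{need:.0f} GB) "
                  f"{verdict} on a single {name}")
```

Under these assumptions, the FP16 weights of a 7B-parameter model need about 14 GB and fit comfortably on either card, while full training of the same model needs on the order of 112 GB for weights, gradients, and optimizer state, which exceeds a single GPU of either type and points toward multi-GPU setups or memory-saving fine-tuning methods.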

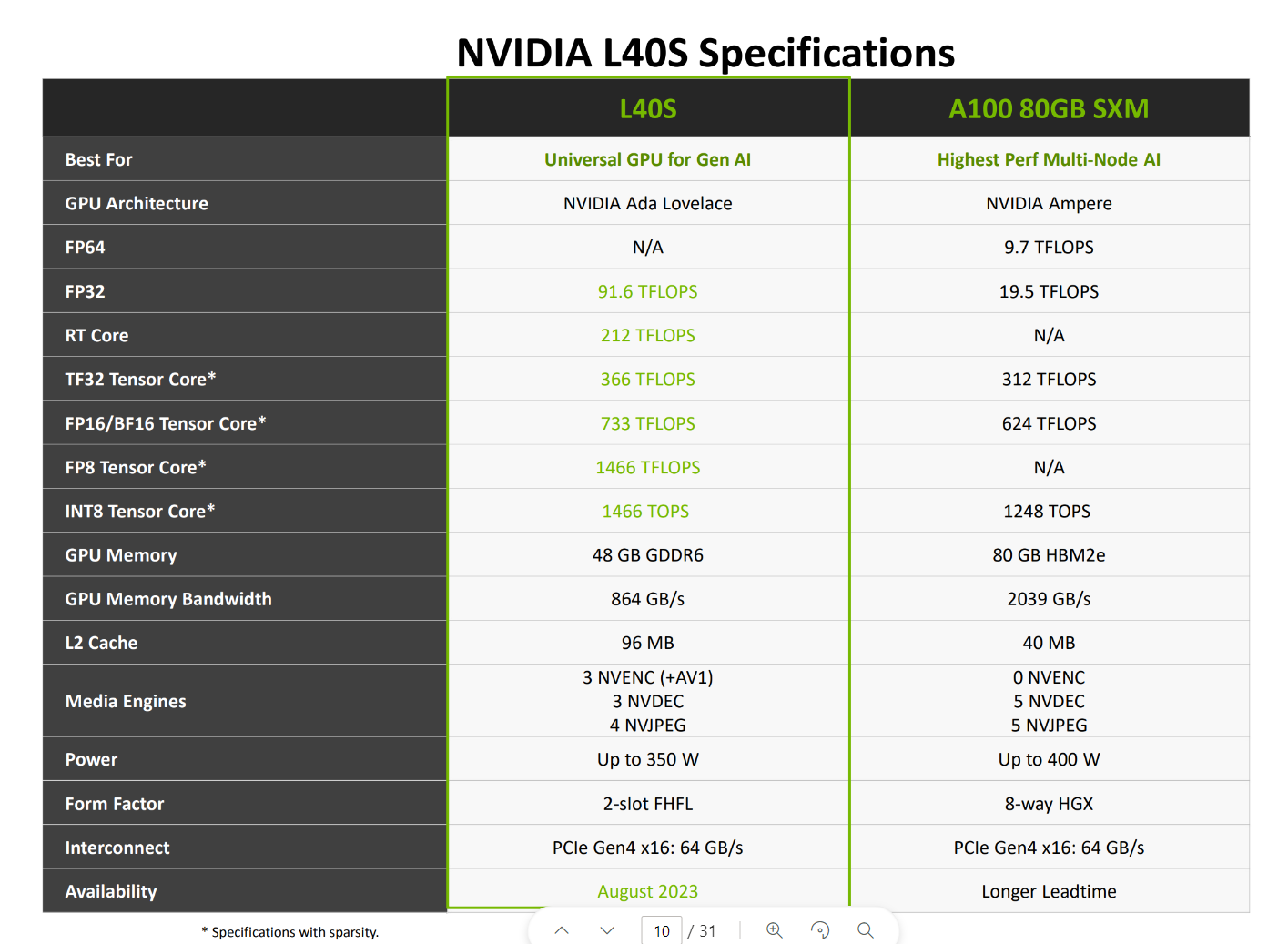

Here is a comparison of the L40S and A100 specifications:

Ultimately, it is crucial to consider your specific workload demands and project budget to make an informed decision regarding the appropriate GPU for your LLM endeavors.

While the NVIDIA A100 is a powerhouse GPU for LLM workloads, its state-of-the-art technology comes at a higher price point. The L40S, on the other hand, offers excellent performance and efficiency at an affordable cost.

Furthermore, the L40S is available for purchase immediately, while the A100 currently has extended lead times. Combined with its performance and efficiency, this availability has led many customers to view the L40S as a highly appealing option in its own right, regardless of lead times for alternative GPUs.

Choosing the right GPU for LLM inference and training is a critical decision that directly impacts model performance and productivity. The NVIDIA L40S offers a great balance between performance and affordability, making it an excellent option. You can find GPU server solutions from Thinkmate based on the L40S here.

Remember, as technology evolves, newer and more powerful GPUs will continue to emerge, so it is important to stay informed and reassess your requirements periodically. If you have questions about which technology is right for you, reach out to our solutions experts at tmsales@thinkmate.com.