Why These Systems, Why Now?

Work is entering a phase where AI does more than create—it decides, reasons, and acts. This next stage, often described as agentic AI, places far greater demands on computing infrastructure. The models behind these systems are larger, more complex, and need to deliver results in real time.

Infrastructure that was sufficient for generative AI is no longer enough. To keep pace, professionals and organizations need platforms that combine AI, graphics, and high-performance computing in a way that is both accessible and scalable, whether on the desktop or in the data center.

That’s where the new NVIDIA RTX PRO series comes in: desktop workstations and servers built on the Blackwell architecture, designed to handle the demands of today’s AI-driven workflows.

Workstations: Power for Professionals Across Disciplines

For individuals and teams, RTX PRO workstations provide a foundation for tackling complex tasks locally. They’re engineered for those who need real-time results and seamless interaction with data, models, or designs.

Key workloads include:

Workstation Comparison

| Feature / Metric | Previous Gen (Ada/L40S) | RTX PRO Blackwell (Workstations) | Advantage |
|---|---|---|---|
| Tensor Cores | 4th-gen | 5th-gen with FP4 support | Up to 3× AI performance |
| Ray Tracing | 3rd-gen RT Cores | 4th-gen RT Cores | 2× faster ray tracing |
| Memory | Up to 48 GB GDDR6 | Up to 96 GB GDDR7 | Handle much larger datasets |
| Multi-Instance GPU | Limited | Up to 4 MIG instances | Multiple workloads per GPU |
| Display Support | DP 1.4 | DP 2.1 | 8K at 240 Hz, 16K at 60 Hz |

For professionals used to long render times, sluggish simulations, or relying on cloud resources, these changes mean work can happen faster, closer to the point of creation, and with more control.
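To make the memory and FP4 rows of the table concrete, here is a rough back-of-the-envelope sketch in Python. It estimates how much memory a model's weights need at different precisions and whether they would fit in 48 GB or 96 GB. The function names, the 80% headroom figure, and the even split across MIG instances are illustrative assumptions, not NVIDIA specifications. The estimate covers weights only and ignores the KV cache, activations, and the scaling metadata that real FP4 formats carry.

```python
# Approximate bytes per parameter for common weight formats.
# FP4 packs two values into one byte, hence 0.5.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "fp8": 1.0, "fp4": 0.5}


def weight_footprint_gb(num_params: float, precision: str) -> float:
    """Approximate memory for model weights alone, in GB (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits(num_params: float, precision: str, memory_gb: float,
         headroom: float = 0.8) -> bool:
    """True if the weights fit within a fraction of GPU memory.

    The 0.8 headroom is an assumed allowance for everything that is
    not weights (KV cache, activations, framework overhead).
    """
    return weight_footprint_gb(num_params, precision) <= memory_gb * headroom


def mig_slice_gb(total_gb: float, instances: int) -> float:
    """Memory per MIG instance, assuming an even split (an assumption;
    actual MIG profiles are defined by the driver)."""
    return total_gb / instances


if __name__ == "__main__":
    params = 70e9  # hypothetical 70-billion-parameter model
    for precision in ("fp16", "fp8", "fp4"):
        size = weight_footprint_gb(params, precision)
        print(f"{precision}: {size:.0f} GB, "
              f"fits in 48 GB: {fits(params, precision, 48)}, "
              f"fits in 96 GB: {fits(params, precision, 96)}")
    print(f"Per-instance memory with 4 MIG slices: {mig_slice_gb(96, 4):.0f} GB")
```

Under these assumptions, a hypothetical 70-billion-parameter model needs about 140 GB for FP16 weights, which exceeds either card, but only about 35 GB at FP4. This is the kind of arithmetic behind the claim that lower-precision formats and larger memory let more work stay on a local workstation.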

Servers: The Enterprise Backbone

If workstations are about enabling individuals, RTX PRO servers are about enabling organizations. They bring the same Blackwell architecture to the data center, scaling from single workloads to full “AI factories.”

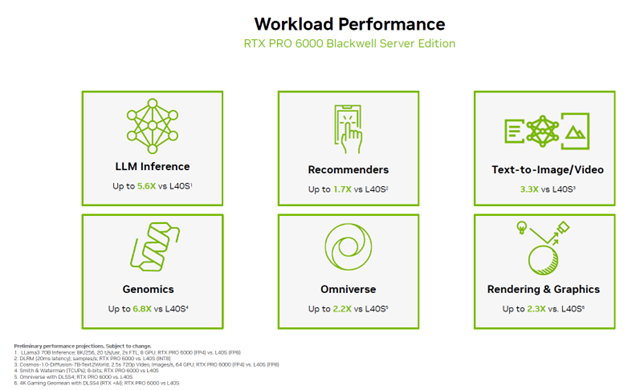

Accelerated Computing for Every Workload

The RTX PRO 6000 Blackwell Server Edition delivers significant gains across AI, science, and graphics workloads, helping teams work faster and more efficiently.

Servers Comparison

| Metric | CPU-Only Systems | NVIDIA H100 Systems | RTX PRO Servers (Blackwell) |
|---|---|---|---|
| AI Inference Performance | Baseline | Strong | 2× better price-performance than H100 for LLM inference |
| Performance Efficiency | 1× | ~10× | 18× more efficient than CPU-only |
| Rack Fit | Often bespoke | Larger footprint | Standard 2U servers |
| Workload Coverage | Limited | Primarily AI | AI, graphics, simulation, video |

For enterprises, this means adopting accelerated computing without tearing out existing infrastructure. RTX PRO servers can slot into standard 2U rack platforms, delivering a leap in capability without the need for an entirely new facility.

A Unified Platform for the Future

One of the most important aspects of the RTX PRO series is that both workstations and servers run on the same software stack. From CUDA-X libraries and NVIDIA Omniverse to NVIDIA AI Enterprise and NIM microservices, the tools are consistent.

That means a designer can prototype on a workstation, a researcher can fine-tune models locally, and an enterprise can deploy those models at scale, all on a unified platform. The result is less friction, faster iteration, and more predictable results across environments.

Looking Ahead

The shift to agentic AI, real-time simulation, and AI-driven design is accelerating. Organizations that want to stay ahead need infrastructure that’s not only powerful, but also flexible and ready for the next generation of workloads.

Explore RTX PRO workstations and servers, designed for both creators and enterprises, at Thinkmate.