As AI continues to evolve, demand for powerful, efficient hardware for Large Language Model (LLM) inference keeps growing. The NVIDIA H100 NVL sets a new standard in this space, offering a platform optimized for the heavy computational demands of LLMs such as Llama 2 and GPT-3.

Why the H100 NVL for LLM Inference?

Real-World Impact:

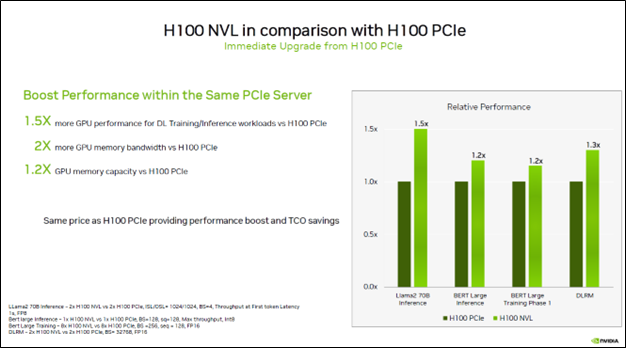

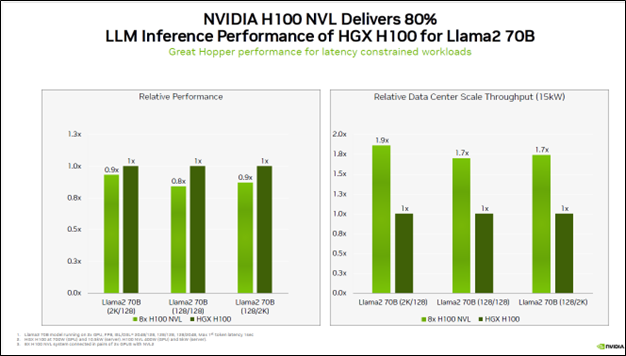

For Llama 2 70B inference, the H100 NVL delivers nearly twice the performance of the H100 PCIe, returning results faster and with lower latency. In latency-sensitive deployments where every millisecond counts, that extra headroom keeps AI workloads responsive and supports quicker decision-making and more agile operations.
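Memory capacity is one reason large models such as Llama 2 70B are demanding to serve. The sketch below is a back-of-the-envelope estimate of weight memory and the headroom left over for the KV cache and activations. It assumes 16-bit weights (2 bytes per parameter), a two-GPU configuration, and per-GPU capacities of 80 GB (H100 PCIe) and 94 GB (H100 NVL), taken from NVIDIA's published figures; verify them against the current datasheets. The helper names are hypothetical, and the estimate deliberately ignores runtime overhead and does not model the KV cache itself.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed for the model weights alone, in decimal GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def headroom_gb(num_params: float, bytes_per_param: float,
                gpu_memory_gb: float, num_gpus: int) -> float:
    """Combined GPU memory left after loading the weights.

    Assumption: weights are split evenly across GPUs (e.g. tensor parallelism)
    and all memory is usable; runtime overhead is ignored. The remainder is
    what is available for the KV cache, activations, and batching.
    """
    total = gpu_memory_gb * num_gpus
    return total - weight_memory_gb(num_params, bytes_per_param)


if __name__ == "__main__":
    LLAMA2_70B_PARAMS = 70e9  # nominal parameter count
    FP16_BYTES = 2            # assumed 16-bit weights

    # Assumed per-GPU capacities (check current NVIDIA datasheets).
    for name, mem_gb in [("H100 PCIe", 80), ("H100 NVL", 94)]:
        weights = weight_memory_gb(LLAMA2_70B_PARAMS, FP16_BYTES)
        spare = headroom_gb(LLAMA2_70B_PARAMS, FP16_BYTES, mem_gb, num_gpus=2)
        print(f"{name} x2: weights {weights:.0f} GB, headroom {spare:.0f} GB")
```

Under these assumptions, the 140 GB of FP16 weights leave about 20 GB of headroom across two H100 PCIe GPUs versus about 48 GB across two H100 NVL GPUs. More headroom allows larger batches or longer contexts, though real throughput also depends on compute, memory bandwidth, and the serving software.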

Easy Upgrade to NVIDIA H100 NVL

If you currently run the H100 PCIe, consider upgrading to the H100 NVL for a more powerful solution, especially in power-constrained data centers. Here are the top reasons this upgrade makes sense:

Conclusion:

By combining higher performance, greater memory capacity, and optimized power efficiency, the H100 NVL is a strong choice for organizations looking to harness the full potential of large language models.

Ready to learn more? Explore how the H100 NVL can elevate your AI infrastructure and help you stay ahead in the rapidly advancing world of AI.